1st Workshop on Visualization for AI Explainability

October 22, 2018 at IEEE VIS in Berlin, Germany

The role of visualization in artificial intelligence (AI) has gained significant attention in recent years. As AI models grow more complex, the need to understand their inner workings has become critical. Visualization has the potential to be a powerful technique for meeting this need.

The goal of this workshop is to initiate a call for 'explainables' / 'explorables' that use visualization to explain how AI techniques work. We believe the VIS community can leverage its expertise in creating visual narratives to bring new insight into the often obscure complexity of AI systems.

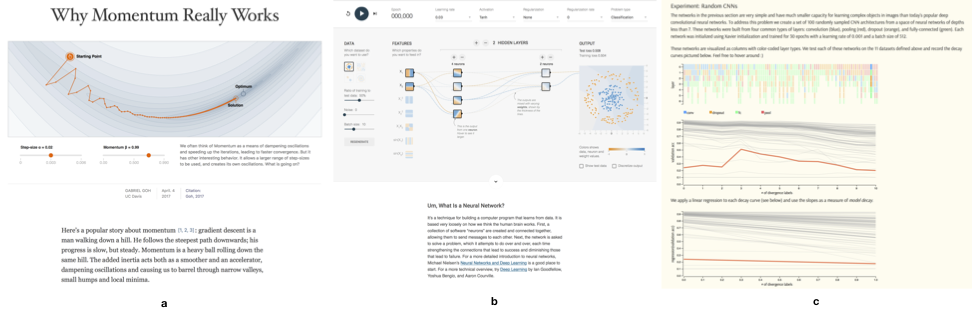

- (a) Why Momentum Really Works by Gabriel Goh

- (b) TensorFlow Playground by Smilkov, Carter, et al.

- (c) FormaFluens Data Experiment by Strobelt, Phibbs, Martino

Important Dates

Program Overview

All times in ET (UTC -5).

| Time | Program |
|---|---|
| 2:20 -- 2:25 | Welcome from the Organizers |
| 2:25 -- 3:10 | Keynote: Been Kim (Google Brain), "Towards Interpretability for Everyone: Testing with Concept Activation Vectors (TCAV)". The ultimate goal of interpretability is to help users gain insights into the model for more responsible use of ML. Unlike the majority of subfields in ML, interpretable ML requires studying how humans parse complex information and exploring effective ways to communicate such information. This human aspect becomes even more critical when developing interpretability methods for non-ML experts and lay users, which is my core research agenda. |
| 3:10 -- 3:35 | Session I: Neural Networks and Deep Learning. "Visualising State Space Representations of Long Short-Term Memory Networks" (Emmanuel M. Smith, Jim Smith, Phil Legg and Simon Francis); "Visualizing neuron activations of neural networks with the grand tour" (Mingwei Li, Zhenge Zhao and Carlos Scheidegger) |
| 3:35 -- 4:00 | Session II: Projections and Dimensionality Reduction. "Roads from Above" (Greg More, Slaven Marusic and Caihao Cui); "The Beginner's Guide to Dimensionality Reduction" (Matthew Conlen and Fred Hohman); "Dimension, Distances, or Neighborhoods? Projection Literacy for the Analysis of Multivariate Data" (Dirk Streeb, Rebecca Kehlbeck, Dominik Jäckle and Mennatallah El-Assady) |
| 4:00 -- 4:20 | Coffee Break with Poster Session |
| 4:20 -- 4:45 | Session III: Data Distribution and Bias. "A Visual Exploration of Gaussian Processes" (Jochen Görtler, Rebecca Kehlbeck and Oliver Deussen); "Towards an Interpretable Latent Space" (Thilo Spinner, Jonas Körner, Jochen Görtler and Oliver Deussen); "Understanding Bias in Machine Learning" (Jindong Gu and Daniela Oelke) |
| 4:45 -- 5:10 | Session IV: Machine Learning Processes and Explanation Strategies. "Minions, Sheep, and Fruits: Metaphorical Narratives to Explain Artificial Intelligence and Build Trust" (Wolfgang Jentner, Rita Sevastjanova, Florian Stoffel, Daniel Keim, Jurgen Bernard and Mennatallah El-Assady); "Aimacode Javascript - Minimax" (Michael Kawano); "Going beyond Visualization: Verbalization as Complementary Medium to Explain Machine Learning Models" (Rita Sevastjanova, Fabian Beck, Basil Ell, Cagatay Turkay, Rafael Henkin, Miriam Butt, Daniel Keim and Mennatallah El-Assady) |
| 5:10 -- 5:55 | Moderated Panel Discussion |
| 5:55 -- 6:00 | Best submission ceremony and "Auf Wiedersehen" :) |
| 8:00 | VISxAI Eastcoast party |

Hall of Fame

Each year we award Best Submissions and Honorable Mentions. Congrats to our winners!