4th Workshop on

Visualization for AI Explainability

October 25th, 2021 at IEEE VIS, held virtually (originally New Orleans, Louisiana)

The role of visualization in artificial intelligence (AI) has gained significant attention in recent years. As AI models grow more complex, the need to understand their inner workings has become critical. Visualization is a potentially powerful technique for meeting that need.

The goal of this workshop is to initiate a call for 'explainables' / 'explorables' that explain how AI techniques work using visualization. We believe the VIS community can leverage their expertise in creating visual narratives to bring new insight into the often obfuscated complexity of AI systems.

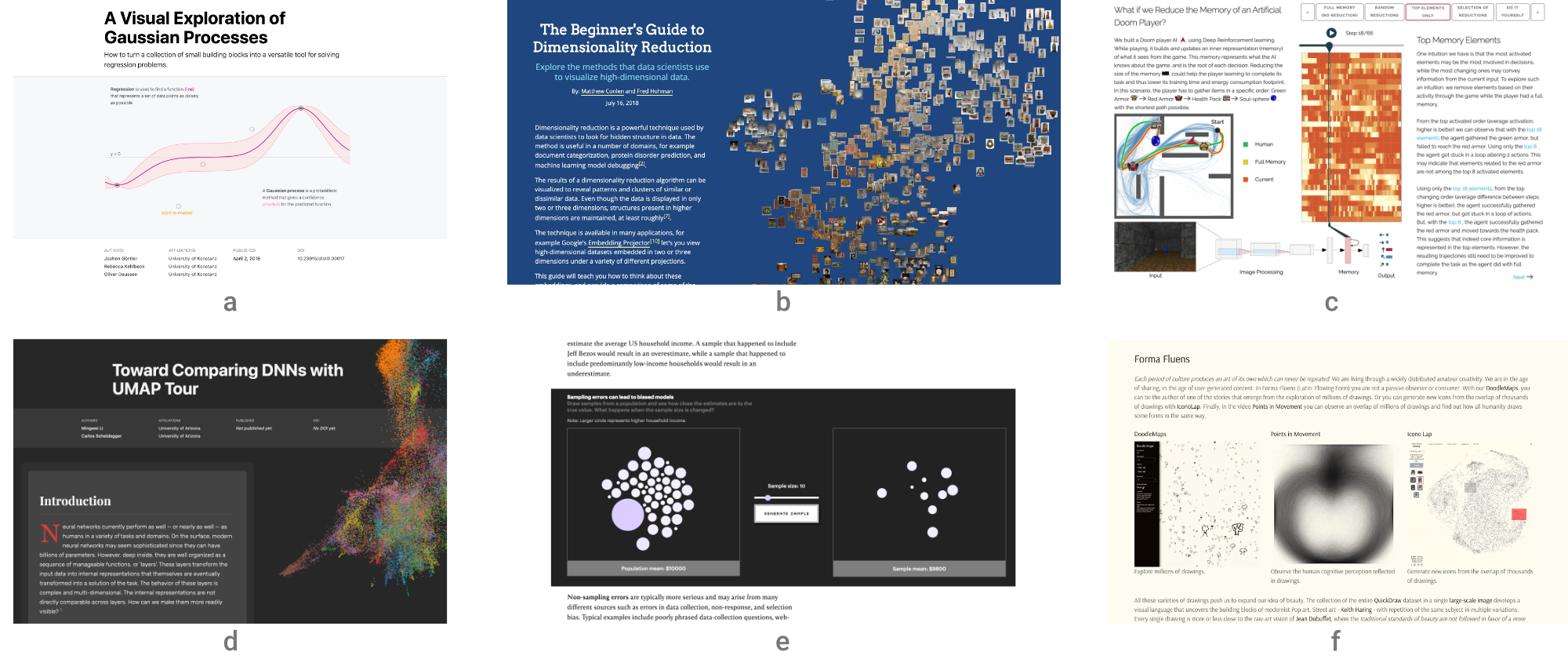

- (a) A Visual Exploration of Gaussian Processes by Görtler, Kehlbeck, and Deussen (VISxAI 2018)

- (b) The Beginner's Guide to Dimensionality Reduction by Conlen and Hohman (VISxAI 2018)

- (c) What if we Reduce the Memory of an Artificial Doom Player? by Jaunet, Vuillemot, and Wolf (VISxAI 2019)

- (d) Comparing DNNs with UMAP Tour by Li and Scheidegger (VISxAI 2020)

- (e) The Myth of the Impartial Machine by Feng and Wu

- (f) FormaFluens Data Experiment by Strobelt, Phibbs, and Martino

Program Overview

All times in ET (UTC -5).

| Time | Event |
| --- | --- |
| 12:00 -- 12:05 | Welcome from the Organizers |
| 12:05 -- 1:00 | Keynote: Using the Webpage as the Main Medium for Communicating Research Ideas by David Ha (Google, @hardmaru) |
| 1:00 -- 1:30 | Session I: What Have Language Models Learned? by Adam Pearce; Feature Sonification: An investigation on the features learned for Automatic Speech Recognition by Amin Ghiasi, Hamid Kazemi, W. Ronny Huang, Emily Liu, Micah Goldblum, Tom Goldstein; Interactive Similarity Overlays by Ruth Fong, Alexander Mordvintsev, Andrea Vedaldi, Chris Olah |
| 1:30 -- 2:00 | Break |
| 2:00 -- 2:30 | Session II: An Interactive Introduction to Model-Agnostic Meta-Learning by Luis Müller, Max Ploner, Thomas Goerttler, Klaus Obermayer; Demystifying the Embedding Space of Language Models by Rebecca Kehlbeck, Rita Sevastjanova, Thilo Spinner, Tobias Stähle, Mennatallah El-Assady; Backprop Explainer: An Explanation with Interactive Tools by Donald Bertucci, Minsuk Kahng |
| 2:30 -- 2:35 | Project Pitch Videos |
| 2:35 -- 3:05 | Session III: (Un)Fair Machine by Vu Luong; Amazon's MLU-Explain: Interactive Explanations of Core Machine Learning Concepts by Jared Wilber, Jenny Yeon, Brent Werness; Exploring Hidden Markov Model by Rithwik Kukunuri, Rishiraj Adhikary, Mahika Jaguste, Nipun Batra, Ashish Tendulkar |
| 3:05 -- 3:10 | Closing Session |
| 3:10 -- 5:00 | VISxAI Eastcoast Party |

Hall of Fame

Each year we award Best Submissions and Honorable Mentions. Congrats to our winners!