7th Workshop on Visualization for AI Explainability

October 13, 2024 at IEEE VIS in St. Pete Beach, Florida

The role of visualization in artificial intelligence (AI) has gained significant attention in recent years. As AI models grow more complex, so does the need to understand their inner workings. Visualization is potentially a powerful technique for meeting that need.

The goal of this workshop is to issue a call for 'explainables' / 'explorables' that use visualization to explain how AI techniques work. We believe the VIS community can leverage its expertise in creating visual narratives to bring new insight into the often obscure complexity of AI systems.

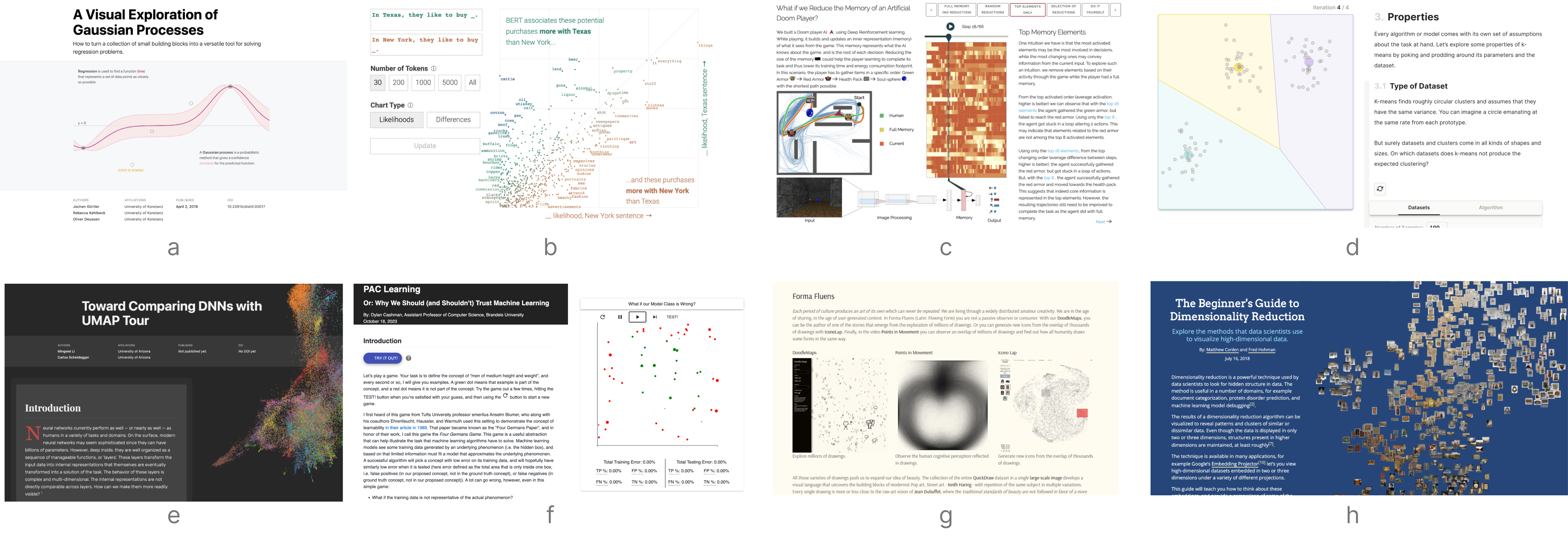

- (a) A Visual Exploration of Gaussian Processes by Görtler, Kehlbeck, and Deussen (VISxAI 2018)

- (b) What Have Language Models Learned? by Adam Pearce (VISxAI 2021)

- (c) What if we Reduce the Memory of an Artificial Doom Player? by Jaunet, Vuillemot, and Wolf (VISxAI 2019)

- (d) K-Means Clustering: An Explorable Explainer by Yi Zhe Ang (VISxAI 2022)

- (e) Comparing DNNs with UMAP Tour by Li and Scheidegger (VISxAI 2020)

- (f) PAC Learning Or: Why We Should (and Shouldn't) Trust Machine Learning by Cashman (VISxAI 2023)

- (g) FormaFluens Data Experiment by Strobelt, Phibbs, and Martino

- (h) The Beginner's Guide to Dimensionality Reduction by Conlen and Hohman (VISxAI 2018)

Important Dates

Program Overview

All times are in Eastern Time (EDT, UTC-4).

| Time | Event |
|---|---|
| 8:30 | Welcome from the Organizers |
| **Session I** (75 minutes) | |
| 8:35–9:15 | **Opening Keynote:** David Bau (@davidbau), *Resilience and Human Understanding in AI*. What is the role of human understanding in AI? As increasingly massive AI systems are deployed into an unpredictable and complex world, interpretability and controllability are the keys to achieving resilience. We discuss results in understanding and editing large-scale transformer language models and diffusion image synthesis models, and how these are part of an emerging research agenda in interpretable generative AI. Finally, we talk about the concentration of power that is emerging due to the scaling up of large-scale AI, and the kind of infrastructure that will be needed to ensure broad and democratized human participation in the future of AI. |
| 9:15–9:45 | **Lightning Talks I:** *Can Large Language Models Explain Their Internal Mechanisms?* (Nada Hussein, Asma Ghandeharioun, Ryan Mullins, Emily Reif, Jimbo Wilson, Nithum Thain, Lucas Dixon); *Explaining Text-to-Command Conversational Models* (Petar Stupar, Prof. Dr. Gregory Mermoud, Jean-Philippe Vasseur); *TalkToRanker: A Conversational Interface for Ranking-based Decision-Making* (Conor Fitzpatrick, Jun Yuan, Aritra Dasgupta); *Where is the information in data?* (Kieran Murphy, Dani S. Bassett); *Explainability Perspectives on a Vision Transformer: From Global Architecture to Single Neuron* (Anne Marx, Yumi Kim, Luca Sichi, Diego Arapovic, Javier Sanguino Bautiste, Rita Sevastjanova, Mennatallah El-Assady) |
| 9:45–10:15 | Break |
| **Session II** (75 minutes) | |
| 10:15–10:45 | **Lightning Talks II:** *The Illustrated AlphaFold* (Elana P Simon, Jake Silberg); *A Visual Tour to Empirical Neural Network Robustness* (Chen Chen, Jinbin Huang, Ethan M Remsberg, Zhicheng Liu); *Panda or Gibbon? A Beginner's Introduction to Adversarial Attacks* (Yuzhe You, Jian Zhao); *What Can a Node Learn from Its Neighbors in Graph Neural Networks?* (Yilin Lu, Chongwei Chen, Matthew Xu, Qianwen Wang); *ExplainPrompt: Decoding the language of AI prompts* (Shawn Simister); *Inside an interpretable-by-design machine learning model: enabling RNA splicing rational design* (Mateus Silva Aragao, Shiwen Zhu, Nhi Nguyen, Alejandro Garcia, Susan Elizabeth Liao) |
| 10:45–11:30 | **Closing Keynote:** Adam Pearce (@adamrpearce), *Why Aren't We Using Visualizations to Interact with AI?* Well-crafted visualizations are the highest-bandwidth way of downloading information into our brains. As complex machine learning models become increasingly useful and important, can we move beyond mostly using text to understand and engage with them? |
| 11:30 | Closing |

Hall of Fame

Each year we award Best Submissions and Honorable Mentions. Congrats to our winners!