8th Workshop on

Visualization for AI Explainability

November 2, 2025 at IEEE VIS in Vienna, Austria

The role of visualization in artificial intelligence (AI) has gained significant attention in recent years. As AI models grow more complex, the need to understand their inner workings has become critical. Visualization is a potentially powerful technique for meeting that need.

The goal of this workshop is to initiate a call for "explainables" / "explorables" that explain how AI techniques work using visualization. We believe the VIS community can leverage their expertise in creating visual narratives to bring new insight into the often obfuscated complexity of AI systems.

Important Dates

- August 6, 2025 (originally July 30, 2025), anywhere on Earth: Submission Deadline
- September 8, 2025: Author Notification
- October 1, 2025: Camera Ready Deadline

Program Overview

All times in CET (UTC +1).

| Time | Session |
| --- | --- |
| 9:00am | Welcome from the Organizers |
| 9:10 -- 10:30 | Session I: Lightning Talks (talks listed below the table) |
| 10:30 -- 11:00 | Break |
| 11:00 -- 12:30 | Session II: VISxAI Unconf. Come by to discuss what's hot in VIS + AI, meet new people, and build community! 1 hour: breakouts. 30 min: share-outs. |
| 12:30 -- 2:00 | Lunch Break |
| 2:00 -- 3:30 | Session III: Fireside Chat (with original VISxAI Organizers!): Hendrik Strobelt, Adam Perer, Menna El-Assady. How has the intersection of visualization and machine learning changed since the first VISxAI (2018)? |
| 3:30 -- 4:00 | Break |
| 4:00 -- 5:30 | Session IV: Closing Keynote: Martin Wattenberg - @wattenberg |
| 5:30 | Closing |

Session I: Lightning Talks

- Learning as Choosing a Loss Distribution -- Matthew J Holland

- LIME and SHAP Explained: From Computation to Interpretation -- Aeri Cho, Jeongmin Rhee, Seokhyeon Park, Jinwook Seo

- Transformer Explainer: LLM Transformer Model Visually Explained -- Aeree Cho, Grace C. Kim, Alexander Karpekov, Alec Helbling, Zijie J. Wang, Seongmin Lee, Benjamin Hoover, Duen Horng Chau

- The Mystery of In-Context Learning: How Transformers Learn Patterns -- Sundara Srivathsan, Lighittha PR, Prithivraj S, Suganya Ramamoorthy

- ICL‑Scope: Peering Inside In‑Context Learning with Real‑Time Interactive Visualisation -- Bhaskarjit Sarmah, Reetu Raj Harsh

- ESCAPE - Explaining Stable Diffusion via Cross Attention Maps and Prompt Editing -- Diego Zafferani, Giovanni De Muri, Johanna Hedlund Lindmar, Akmal Ashirmatov, Sinie van der Ben, Rita Sevastjanova, Mennatallah El-Assady

- Patch Explorer -- Imke Grabe, Jaden Fiotto-Kaufman, Rohit Gandikota, David Bau

- GFlowNet Playground - Theory and Examples for an Intuitive Understanding -- Florian Holeczek, Alexander Hillisch, Andreas Hinterreiter, Alex Hernández-García, Marc Streit, Christina Humer

- The Illustrated Evo2 -- Jared Wilber, Farhad Ramezanghorbani, Tyler Carter Shimko, John St John, David Romero

Call for Participation

SUBMISSION CLOSED

To make our work more accessible to a general audience, we are soliciting submissions in a novel format: blog-style posts and Jupyter-like notebooks. We also accept position papers in a more traditional form. Please contact us if you want to submit an original work in another format. Email: orga.visxai at gmail.com

Explainable submissions (e.g., interactive articles, markup, and notebooks) are the core element of the workshop, as this workshop aims to be a platform for explanatory visualizations focusing on AI techniques.

Authors are free to use whatever templates and formats they like. However, the narrative has to be visual and interactive, and it should walk readers toward a keen understanding of the ML technique or application. Authors may wish to write a Distill-style blog post, interactive Idyll markup, or a Jupyter or Observable notebook that integrates code, text, and visualization to tell the story.

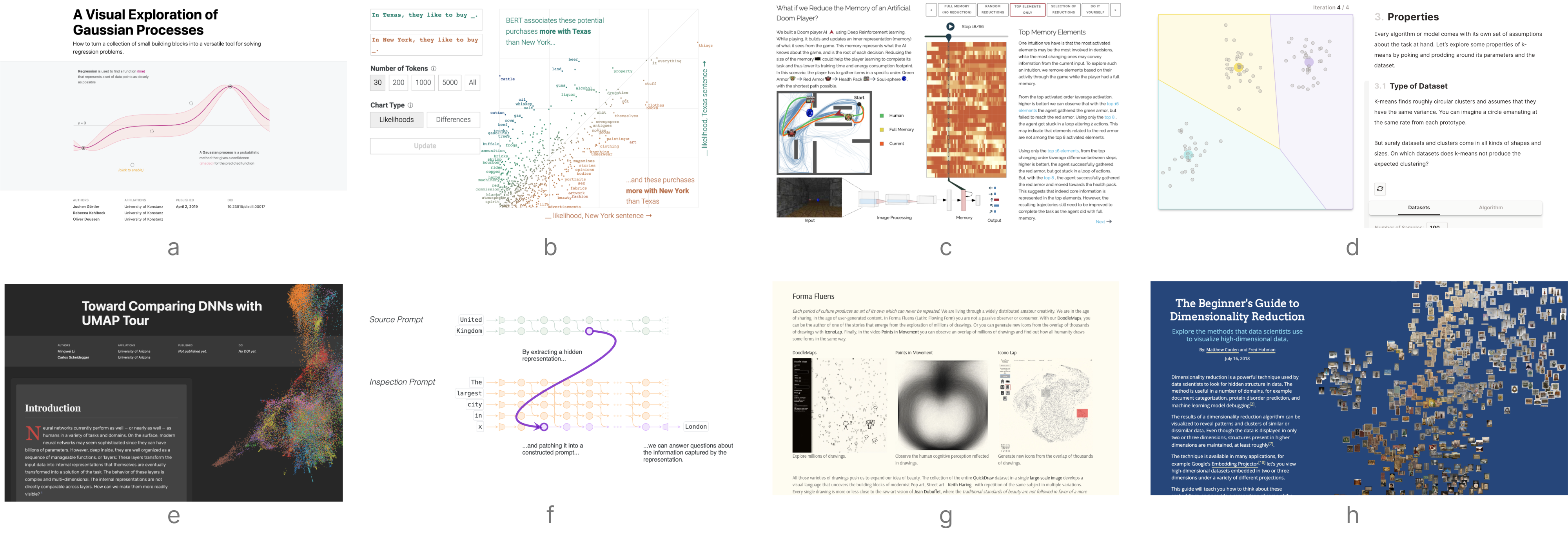

Here are a few examples of visual explanations of AI methods in these types of formats:

- [interactive article] A Visual Exploration of Gaussian Processes

- [interactive article] Why Momentum Really Works

- [interactive article] A Visual Introduction to Machine Learning

- [interactive article] Art-Inspired Data Experiments on Neural Network Model Decay

- [interactive article] Attacking Discrimination with Smarter Machine Learning

- [markup] The Myth of the Impartial Machine

- [markup] The Beginner's Guide to Dimensionality Reduction

- [notebook] t-SNE Explained in Plain JavaScript

- [notebook] How to build a Teachable Machine with TensorFlow.js

- [notebook] Titanic Machine Learning from Disaster

While these examples are informative and excellent, we hope the Visualization & ML community will think about ways to creatively expand on such foundational work and explain AI methods using the novel interactions and visualizations often presented at IEEE VIS. Please contact us if you want to submit an original work in another format. Email: orga.visxai (at) gmail.com.

Note: We also accept more traditional papers that accompany an explainable. Be aware that the explainable must stand on its own. The reviewers will evaluate the explainable (and might choose to ignore the paper).

Hall of Fame

Each year we award Best Submissions and Honorable Mentions. Congrats to our winners!

VISxAI 2024

- Can Large Language Models Explain Their Internal Mechanisms? -- Nada Hussein, Asma Ghandeharioun, Ryan Mullins, Emily Reif, Jimbo Wilson, Dr. Nithum Thain, Dr. Lucas Dixon

- The Illustrated AlphaFold -- Elana P Simon, Jake Silberg

VISxAI 2023

- PAC Learning Or: Why We Should (and Shouldn't) Trust Machine Learning -- Dylan Cashman

- Understanding and Comparing Multi-Modal Models -- Christina Humer, Vidya Prasad, Marc Streit, Hendrik Strobelt

- Do Machine Learning Models Memorize or Generalize? -- Adam Pearce, Asma Ghandeharioun, Nada Hussein, Nithum Thain, Martin Wattenberg, Lucas Dixon

VISxAI 2022

- K-Means Clustering: An Explorable Explainer -- Yi Zhe Ang

- Poisoning Attacks and Subpopulation Susceptibility -- Evan Rose, Fnu Suya, David Evans

VISxAI 2021

- What Have Language Models Learned? -- Adam Pearce

- Feature Sonification: An investigation on the features learned for Automatic Speech Recognition -- Amin Ghiasi, Hamid Kazemi, W. Ronny Huang, Emily Liu, Micah Goldblum, Tom Goldstein

VISxAI 2020

- Comparing DNNs with UMAP Tour -- Mingwei Li and Carlos Scheidegger

- How Does a Computer "See" Gender? -- Stefan Wojcik, Emma Remy, and Chris Baronavski

VISxAI 2019

- What if we Reduce the Memory of an Artificial Doom Player? -- Theo Jaunet, Romain Vuillemot, and Christian Wolf

- Statistical Distances and Their Implications to GAN Training -- Max Daniels

- Selecting the right tool for the job: a comparison of visualization algorithms -- Daniel Burkhardt, Scott Gigante, and Smita Krishnaswamy

VISxAI 2018

- A Visual Exploration of Gaussian Processes -- Jochen Görtler, Rebecca Kehlbeck and Oliver Deussen

- The Beginner's Guide to Dimensionality Reduction -- Matthew Conlen and Fred Hohman

- Roads from Above -- Greg More, Slaven Marusic and Caihao Cui

Organizers (alphabetic)

Alex Bäuerle - Google DeepMind

Angie Boggust - Massachusetts Institute of Technology

Catherine Yeh - Harvard University

Fred Hohman - Apple

Steering Committee

Adam Perer - Carnegie Mellon University

Hendrik Strobelt - MIT-IBM Watson AI Lab

Mennatallah El-Assady - ETH AI Center

Program Committee and Reviewers

Coming soon!